Smoke Detection

A successful endoscopic intervention depends upon proper working conditions, such as skilful camera handling, adequate lighting and the removal of confounding factors such as fluids or smoke. Smoke is an undesirable by-product of electro- or laser cauterization, which is used to prevent or stop bleeding during surgery. Smoke not only constitutes a health hazard for the patient and the medical staff, but it can also considerably obstruct the operating physician’s field of view. It is therefore removed using specialized smoke evacuation systems, which are typically activated manually. However, this action can easily be forgotten or neglected, potentially leading to a situation where the surgeon’s view is severely obstructed by smoke. Although some automated evacuation systems are available, they depend on highly specialized, operating-room-approved and hence expensive sensors. Additionally, automatic evacuation systems often start whenever the surgical device (e.g., a laser) is activated, without checking whether evacuation is actually necessary (i.e., whether smoke is obstructing the surgeon’s vision). Unfortunately, such a simple evacuation strategy causes a loss of pressure in the body cavity, which is problematic. Modern smoke evacuation systems therefore compensate for this deficiency by introducing a surgical gas (e.g., CO2) to prevent body cavities from collapsing. To reduce the consumption of these gases, it would be beneficial to limit the time during which smoke evacuation takes place.

Naturally, automatic evacuation that is performed only when smoke is detected would address both the inconvenience of operating the evacuator manually and the waste of valuable resources. We therefore propose an alternative approach that uses real-time image analysis to activate smoke evacuation systems automatically, only when they are needed. Detecting smoke via image analysis is a binary classification task. To solve it, we propose several approaches [1,2]: two algorithms are based on saturation histogram thresholding, and three utilize CNN-based image analysis.

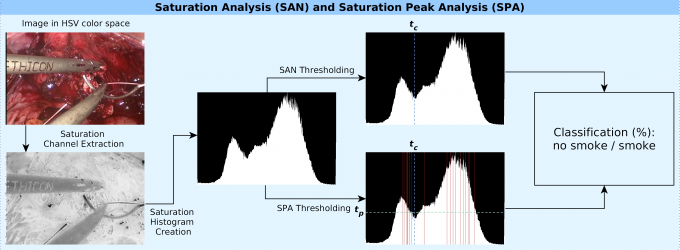

Our first approach to detecting smoke is based on the observation that regions of smoke in endoscopic images tend to be grayish, or rather colorless. It therefore seems appropriate to use the saturation component of the HSV color model to detect such regions. However, this may produce false positives for other colorless entities (e.g., instruments and reflections), so naively observing the saturation values of a video frame yields only moderate classification results. We found, however, that the saturation histogram of a frame lets us determine its overall colorlessness while compensating for insignificant non-smoke influences. In particular, histograms of frames showing smoke have higher values in their lower bins, whereas the opposite is true for non-smoke frames. We call this approach Saturation Analysis (SAN). A variant of this approach, which we call Saturation Peak Analysis (SPA), inspects only significant local bin maxima, i.e., peaks in the histogram’s shape.
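The histogram computation underlying SAN and SPA can be sketched as follows. This is a minimal illustration, not the authors’ implementation: it assumes 8-bit RGB input given as a flat list of (r, g, b) tuples, uses Python’s standard `colorsys` module for the HSV conversion, and the function name `saturation_histogram` and its default of 256 bins are hypothetical, since the bin count is not stated here.

```python
import colorsys


def saturation_histogram(pixels, bins=256):
    """Return a normalized histogram of HSV saturation values.

    pixels: iterable of (r, g, b) tuples with 8-bit channels (0-255).
    bins:   number of histogram bins (hypothetical default).
    Bin i covers saturations in [i/bins, (i+1)/bins); a saturation of
    exactly 1.0 is placed in the last bin.
    """
    counts = [0] * bins
    total = 0
    for r, g, b in pixels:
        # colorsys expects floats in [0, 1] and returns (h, s, v)
        _, s, _ = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        counts[min(int(s * bins), bins - 1)] += 1
        total += 1
    if total == 0:
        return [0.0] * bins
    # Normalize so that histograms of differently sized frames are comparable
    return [c / total for c in counts]


# Three gray (colorless) pixels and one fully saturated red pixel
hist = saturation_histogram([(128, 128, 128)] * 3 + [(255, 0, 0)], bins=10)
print(hist[0], hist[9])  # 0.75 0.25
```

A frame dominated by gray pixels concentrates its mass in the lowest bins, which is exactly the pattern SAN looks for.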

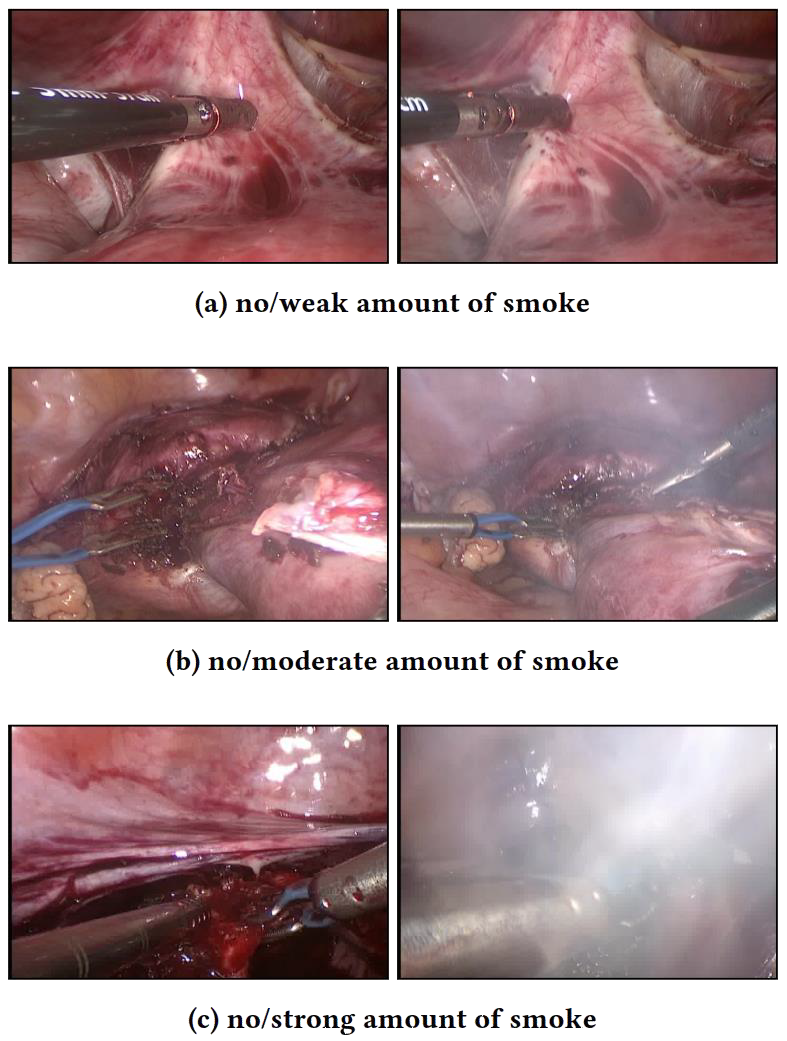

Half of these images show smoke of varying intensity, while the other half show no visual signs of smoke. Using these samples, we apply image saturation analysis and convolutional neural network (CNN) based learning techniques to automatically detect the emergence of smoke in real time. A development prototype using a GoogLeNet-based detection method is presented in the video below.

In both approaches, the classification is based on relating the number of peaks below a classification threshold tc (cf. the blue vertical dashed line in the figure) to the number of peaks above it, yielding the prediction confidences predS for smoke and predNS for non-smoke. In SPA, only peaks above a certain threshold (tp in the figure) are considered. Additionally, a peak must span at least five bins in total, which eliminates small outliers with very similar saturation values (e.g., gray instruments). One drawback of these methods is that the threshold tc yielding the best classification results differs from video to video, since the saturation histograms of different videos differ noticeably. However, evaluation results have shown that values between 0.2 and 0.4 provide good classification results across different test sets.
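The SPA decision rule described above can be sketched roughly as follows. The peak definition is our assumption, not taken from the source: a peak is approximated as a maximal run of contiguous bins whose values exceed tp, its width is the run length, and its position is the highest bin in the run. Peaks whose bin center lies below tc count as smoke evidence, so predS and predNS become the fractions of accepted peaks below and above tc. The function name `spa_confidences` is hypothetical.

```python
def spa_confidences(hist, tc, tp, min_width=5):
    """Sketch of Saturation Peak Analysis (SPA); returns (pred_s, pred_ns).

    hist:      saturation histogram, bin i covering [i/n, (i+1)/n).
    tc:        classification threshold on the saturation axis (e.g. 0.2-0.4).
    tp:        a bin belongs to a peak only if its value exceeds tp.
    min_width: minimum number of contiguous bins a peak must span.
    ASSUMPTION: a peak is a maximal run of bins with value > tp.
    """
    n = len(hist)
    peak_bins = []  # index of the highest bin of each accepted peak
    i = 0
    while i < n:
        if hist[i] <= tp:
            i += 1
            continue
        start = i
        while i < n and hist[i] > tp:
            i += 1
        # The run covers bins start .. i-1; drop narrow outliers
        if i - start >= min_width:
            peak_bins.append(max(range(start, i), key=lambda k: hist[k]))
    if not peak_bins:
        return 0.0, 0.0  # no evidence for either class
    # Compare each peak's bin center with tc
    below = sum(1 for p in peak_bins if (p + 0.5) / n < tc)
    return below / len(peak_bins), (len(peak_bins) - below) / len(peak_bins)
```

Returning (0.0, 0.0) when no peak survives the filters is a design choice of this sketch; a deployed system would need an explicit policy for such frames.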

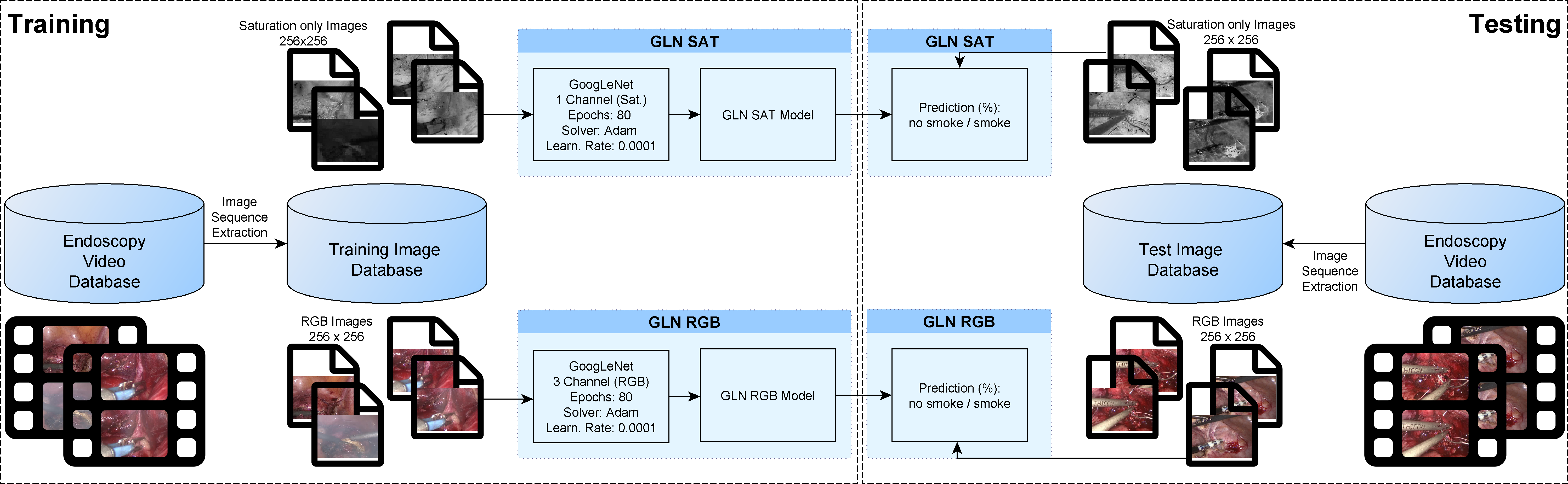

Promising image classification results achieved with deep neural networks, in particular Convolutional Neural Networks (CNNs), motivated us to apply them to the task of smoke detection. Specifically, we use the 22-layer GoogLeNet [3] and the 8-layer AlexNet [4]. To train these networks, we use about 20,000 RGB images from a dataset comprising 30,000 images from different laparoscopic surgeries. Around 50% of these frames show various degrees of smoke. The resulting GoogLeNet is denoted GLN RGB and the resulting AlexNet ALEX RGB. Additionally, we train GoogLeNet on grayscale images that depict only the saturation channel of the HSV color model. This approach, which we refer to as GLN SAT, allows a better comparison between a trained CNN and the aforementioned SAN and SPA approaches, which also rely on saturation.
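The grayscale input for GLN SAT, an image showing only the HSV saturation channel, could be produced along these lines. This is a hedged sketch: the function name `saturation_image` is hypothetical, images are assumed to be lists of rows of 8-bit (r, g, b) tuples, and saturation is scaled to the 0-255 range. The text does not say how the single channel is fed to GoogLeNet; replicating it into three channels for a network with an RGB input layer would be one option, but that is an assumption.

```python
import colorsys


def saturation_image(rgb_rows):
    """Map an RGB image (rows of 8-bit (r, g, b) tuples) to an 8-bit
    grayscale image whose intensity is each pixel's HSV saturation."""
    out = []
    for row in rgb_rows:
        out_row = []
        for r, g, b in row:
            _, s, _ = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            out_row.append(round(s * 255))  # 0 = colorless, 255 = fully saturated
        out.append(out_row)
    return out


print(saturation_image([[(128, 128, 128), (255, 0, 0)]]))  # [[0, 255]]
```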

To evaluate the five approaches, we performed a series of experiments on three different datasets. The first dataset (DS A) consists of the 10,000 images remaining from the dataset described above, all of which were captured under the same circumstances (e.g., with the same endoscope). The second dataset (DS B) consists of 4,500 laparoscopy images taken from another source. Compared to DS A, DS B features different lighting, and hence its color scheme differs significantly from that of DS A. Finally, we extracted and manually annotated around 100,000 frames from the publicly available Cholec80 dataset [5], which we denote as DS C (available online). Since the videos in the Cholec80 dataset contain a black border, which is characteristic of endoscopic videos, we consider only a rectangular region in the center of each frame, rather than the entire frame, for histogram calculation and CNN training. About 50% of the frames in every dataset show some degree of smoke.
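Restricting the analysis to a central rectangle, as done for DS C, might look like the sketch below. The crop size is not given in the text, so the `keep` fraction (the share of height and width retained), its default value and the function name `center_crop` are all hypothetical; images are again assumed to be lists of pixel rows.

```python
def center_crop(rows, keep=0.7):
    """Return the central region of an image given as a list of pixel rows.

    keep: fraction of the height and width to retain (hypothetical default;
    the crop size used for DS C is not stated in the text).
    """
    if not 0 < keep <= 1:
        raise ValueError("keep must be in (0, 1]")
    if not rows or not rows[0]:
        return []
    h, w = len(rows), len(rows[0])
    ch, cw = max(1, round(h * keep)), max(1, round(w * keep))
    # Center the crop window; any odd remainder goes to the bottom/right
    top, left = (h - ch) // 2, (w - cw) // 2
    return [row[left:left + cw] for row in rows[top:top + ch]]
```

Cropping before computing the histogram keeps the black, colorless border from inflating the low-saturation bins that SAN and SPA interpret as smoke.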

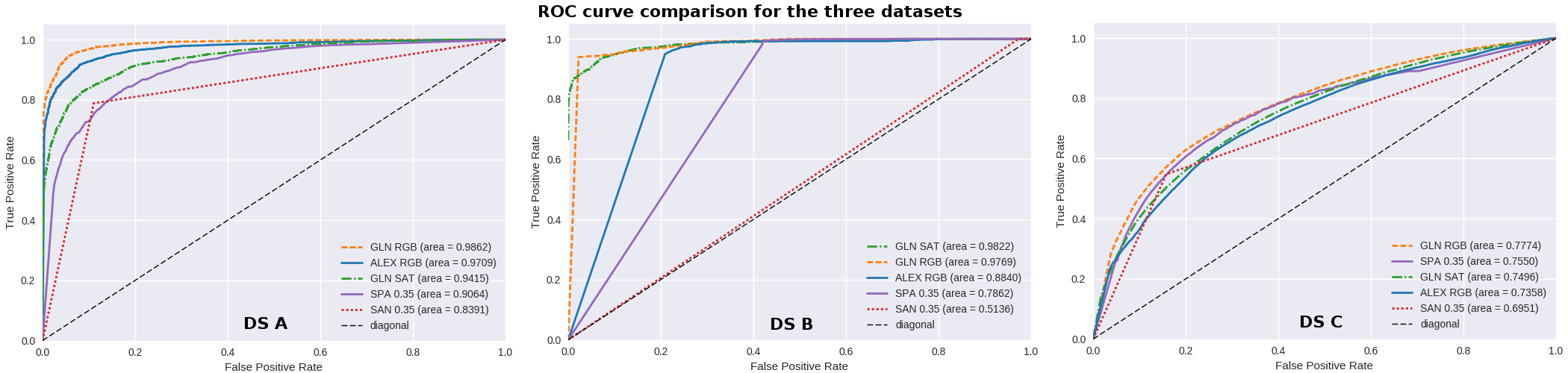

We use receiver operating characteristic (ROC) curves to visualize the classification results of the five approaches. An ROC curve is created by plotting the true positive rate against the false positive rate as the decision threshold on the prediction confidence is varied. The area under the curve (AUC) expresses the performance of a classifier: an area of 1.0 indicates a perfect classifier, whereas 0.5 indicates a classifier that is no better than random guessing.
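The ROC curve and its area can be computed from per-frame smoke confidences (such as predS) and ground-truth labels with a threshold sweep like the one below. This is an illustrative sketch with a hypothetical function name: tied scores are treated as a single threshold step, and the area is integrated with the trapezoidal rule. Established libraries such as scikit-learn provide the same functionality through `sklearn.metrics.roc_curve` and `sklearn.metrics.roc_auc_score`.

```python
def roc_auc(scores, labels):
    """Return (ROC points, AUC) for smoke confidences and 0/1 labels.

    scores: higher values mean "more likely smoke".
    labels: 1 for smoke frames, 0 for non-smoke frames.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    points = [(0.0, 0.0)]  # (false positive rate, true positive rate)
    tp = fp = 0
    i = 0
    while i < len(pairs):
        thr = pairs[i][0]
        # Lower the threshold to `thr`: all samples sharing this score flip at once
        while i < len(pairs) and pairs[i][0] == thr:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    # Trapezoidal rule over consecutive ROC points
    auc = sum((x1 - x0) * (y0 + y1) / 2
              for (x0, y0), (x1, y1) in zip(points, points[1:]))
    return points, auc


_, auc = roc_auc([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0])
print(auc)  # 1.0 (perfect separation)
```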

GLN RGB performs best on two of the three datasets and performs only slightly worse than GLN SAT on DS B. At the other end of the scale, SAN performs worst on all datasets; on DS B in particular, it is only slightly better than a random classifier. Although SPA performs worse than the CNN-based approaches on DS A and DS B, it still provides good performance. Interestingly, SPA achieves the second-best performance on DS C. In general, DS C is the hardest dataset: even the best-performing approach (GLN RGB) achieves an area under the curve of only 0.77. This may be caused by the size of the dataset, which is significantly larger than the other two, and by the fact that the CNN-based approaches were trained only on DS A.

Although the histogram thresholding approaches achieve lower classification performance, they clearly outperform the CNN-based approaches in terms of runtime. In particular, only SAN and SPA achieve real-time performance for a 1080p/25 fps video, whereas the CNN-based approaches need about 100 to 150 ms to process one frame. Since smoke develops slowly across several frames, we believe that dropping frames might suffice to enable real-time CNN-based classification. However, the implications of frame dropping need to be explored more thoroughly in future studies. Additionally, we want to improve the CNN-based methods by using the extensive dataset DS C. Another topic for future work is combining thresholding observations with CNN model predictions.
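The frame-dropping idea can be made concrete with a little arithmetic: at 25 fps a new frame arrives every 1000 / 25 = 40 ms, so a CNN needing 100 to 150 ms per frame could analyze only every third (100 ms) to every fourth (150 ms) frame. The sketch below computes that stride and wraps an arbitrary classifier so that it runs only on every stride-th frame, repeating its last prediction in between. Both `frame_stride` and `StridedClassifier` are hypothetical names, and this sequential version ignores the added latency; a real system would likely run inference asynchronously alongside video capture.

```python
import math


def frame_stride(fps, inference_ms):
    """Smallest stride k such that one inference fits into k frame intervals."""
    frame_ms = 1000.0 / fps  # 40 ms at 25 fps
    return max(1, math.ceil(inference_ms / frame_ms))


class StridedClassifier:
    """Run `classify` on every `stride`-th frame and reuse the last result otherwise."""

    def __init__(self, classify, stride):
        if stride < 1:
            raise ValueError("stride must be >= 1")
        self.classify = classify
        self.stride = stride
        self.frame_index = 0
        self.last = None

    def __call__(self, frame):
        if self.frame_index % self.stride == 0:
            self.last = self.classify(frame)
        self.frame_index += 1
        return self.last


print(frame_stride(25, 100), frame_stride(25, 150))  # 3 4
```

Holding the last prediction is reasonable here only because, as argued above, smoke develops slowly across several frames.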

References

1. A. Leibetseder, M. J. Primus, S. Petscharnig, K. Schoeffmann, “Image-based Smoke Detection in Laparoscopic Videos,” Proc. of the Int. Conf. on Medical Image Computing and Computer Assisted Intervention, 2017, pp. 1-15.

2. A. Leibetseder, M. J. Primus, S. Petscharnig, K. Schoeffmann, “Real-Time Image-based Smoke Detection in Endoscopic Videos,” Proc. of the Thematic Workshops (in conj. with ACM Multimedia Conference), 2017, pp. 1-9.

3. C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, A. Rabinovich, “Going deeper with convolutions,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, 2015, pp. 1-9.

4. A. Krizhevsky, I. Sutskever, G. E. Hinton, “ImageNet Classification with Deep Convolutional Neural Networks,” Advances in Neural Information Processing Systems (NIPS), vol. 25, 2012, pp. 1106-1114.

5. A. P. Twinanda, S. Shehata, D. Mutter, J. Marescaux, M. de Mathelin, N. Padoy, “EndoNet: A Deep Architecture for Recognition Tasks on Laparoscopic Videos,” IEEE Trans. on Medical Imaging, vol. 36, no. 1, 2016, pp. 86-97.